Unveiling RAG Systems: A Practical Exploration

Introduction

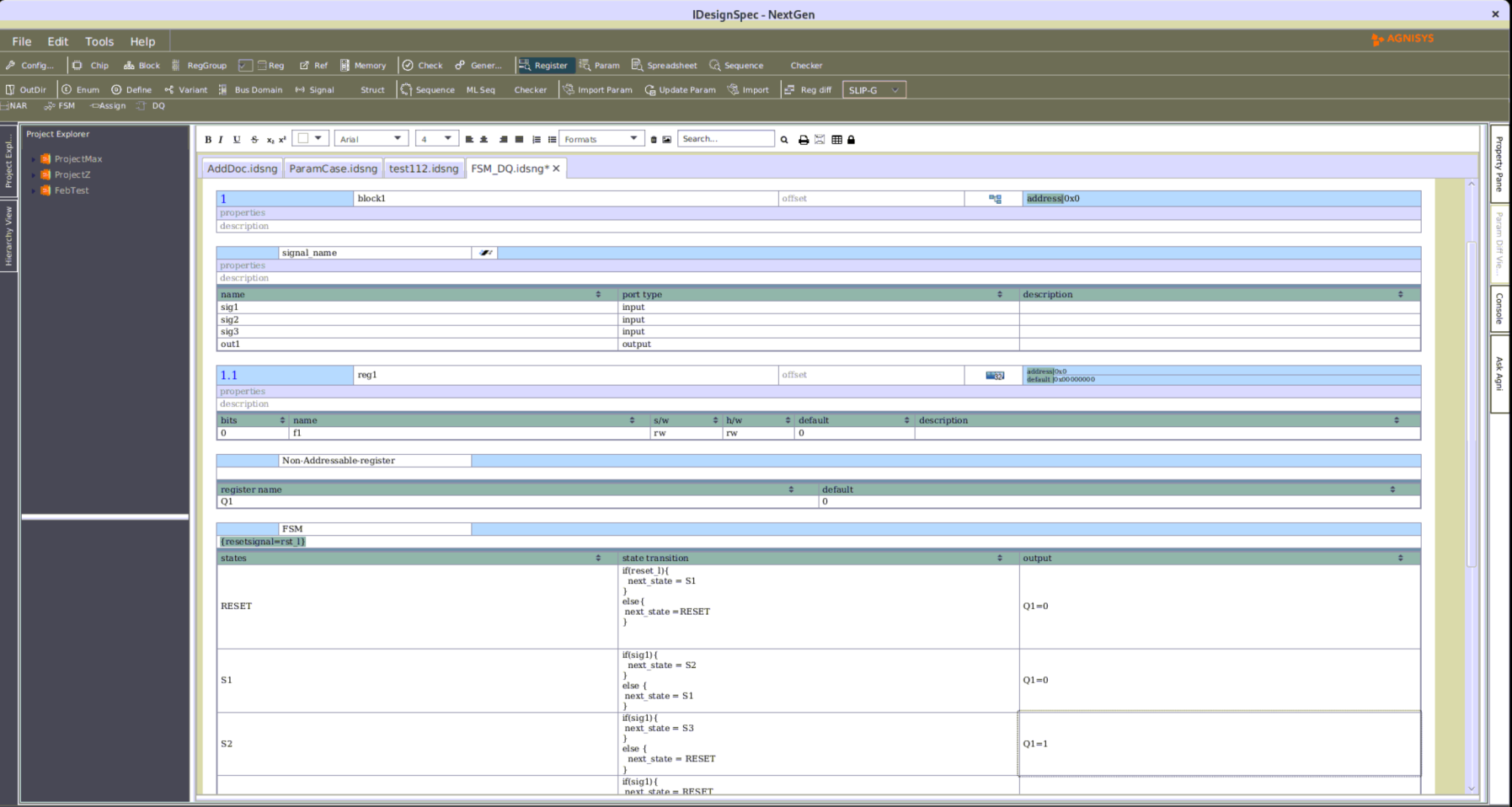

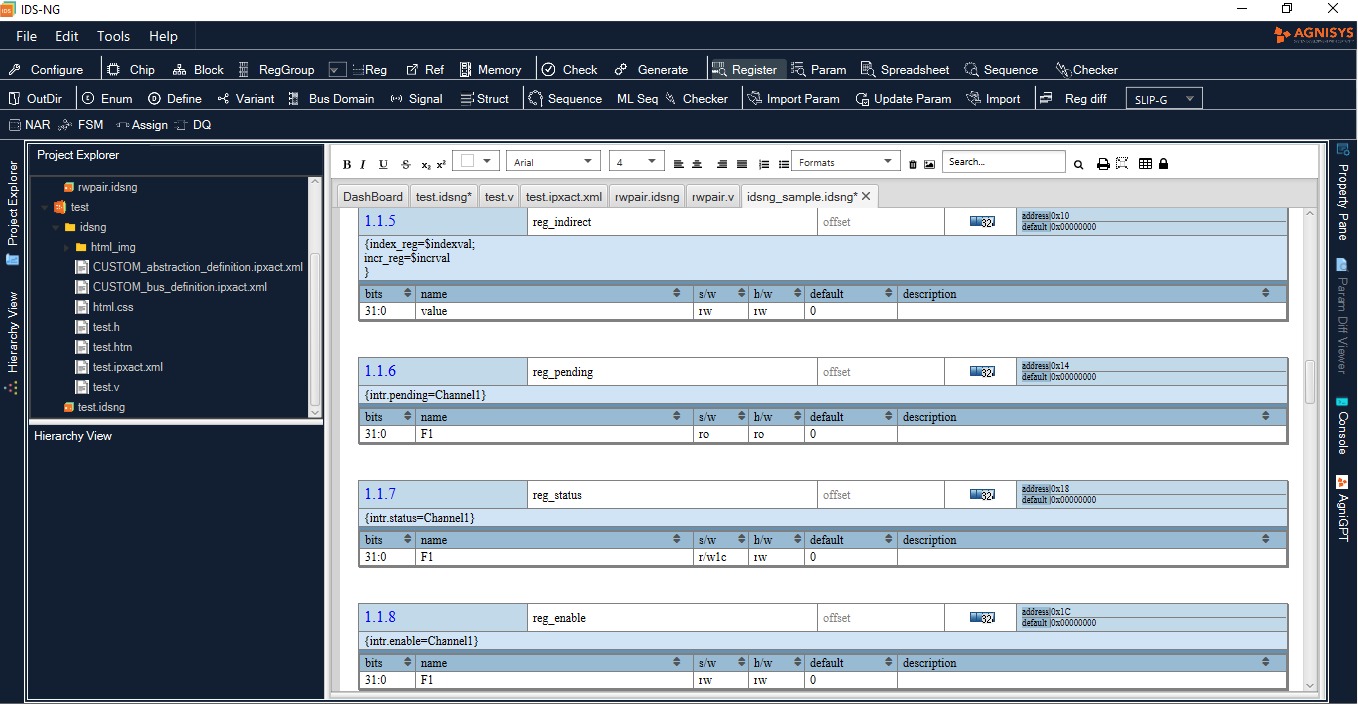

As our product portfolio has evolved over the last two decades, so have the capabilities of our tools and, consequently, their documentation. We recently needed a question-answering system that could handle questions about our company’s proprietary tool documentation. Our main concern throughout was to protect sensitive data from inadvertent disclosure. Retrieval-Augmented Generation (RAG) turned out to be a good fit for these requirements, and we used it to build a question-answering engine. In this article, I will share what we learned from working with RAG systems, focusing on the key design considerations and the decisions involved in implementing them.

We were able to integrate the new system into our products and make it available to our customers.

Why RAG?

Language models pose a significant challenge here: most available models have not been trained on a company’s own data, and companies are often hesitant to fine-tune them because of the risk of leaking proprietary data. Supplying relevant context at query time instead mitigates this risk and also reduces the chance of the model generating incorrect information.

Building RAG Systems

Creating a RAG (Retrieval-Augmented Generation) system isn’t as daunting as it sounds. Thanks to frameworks like LangChain and LlamaIndex, you can swiftly set up your application. But the real puzzle lies in designing the RAG system itself.

There are various components of a RAG system that require attention during the design phase:

- Indexing

- Retrieval

- Generation

- Evaluation

We will discuss each of these components in detail:

Indexing

In a RAG system, indexing means organizing a large amount of text data so that the system can quickly locate the most relevant information for a given query. This process entails converting data from various sources into vector representations (embeddings). The rationale is that similar inputs will have similar vector representations, while dissimilar inputs will be far apart in the embedding space.
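To make the idea of "similar data has similar vectors" concrete, here is a minimal sketch using cosine similarity, one common way to compare embeddings. The three-dimensional vectors are made up for illustration; a real embedding model would produce vectors with hundreds of dimensions.

```python
import math


def cosine_similarity(a, b):
    """Cosine of the angle between two equal-length vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b)


# Hypothetical toy embeddings, not the output of any real model.
query = [0.9, 0.1, 0.0]
chunk_same_topic = [0.8, 0.2, 0.1]
chunk_other_topic = [0.0, 0.1, 0.9]

sim_close = cosine_similarity(query, chunk_same_topic)
sim_far = cosine_similarity(query, chunk_other_topic)
print(sim_close > sim_far)
```

The chunk on the same topic scores much closer to 1 than the unrelated one, which is the property retrieval relies on.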

When designing the indexing step, several crucial design choices must be made:

- Choice of Embedding Model

This is a critical decision, as the quality of the embeddings greatly influences the retrieval process, so choose the embedding model for your RAG system carefully. Once selected, the same model must also be used to embed queries at query time, because vectors produced by different models are not comparable. Any change of model would therefore require reindexing all the data, which could be prohibitively expensive for large datasets. Also consider how fast the model produces embeddings, as a slow model delays responses and hurts the user experience.

- Text Splitting Method

Before input texts are indexed, they are divided into chunks, each of which is indexed separately. Various strategies can be employed for text splitting, such as by sentences, markdown, paragraphs, or semantically. The choice of strategy determines the text retrieved during synthesis time and should be dictated by the specific task and data at hand. Experimentation may be necessary to determine the most effective approach.

When chunking the text, it’s important to experiment with hyperparameters such as chunk size and overlap to find good values. These choices affect how precisely each embedding represents its chunk. Smaller chunks make the retrieved information more precise but risk omitting relevant surrounding details, while larger chunks are more likely to contain the necessary information but lose granularity. A larger number of chunks can also increase query latency and cost, which becomes a problem at scale.
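The two hyperparameters above can be illustrated with a minimal fixed-size splitter. This is only a sketch: it splits on whitespace and counts words, whereas production splitters usually count tokens or respect sentence and markdown boundaries. The function name `split_into_chunks` is our own.

```python
def split_into_chunks(text, chunk_size, overlap):
    """Split text into word-based chunks that share `overlap` words."""
    if chunk_size <= 0:
        raise ValueError("chunk_size must be positive")
    if not 0 <= overlap < chunk_size:
        raise ValueError("overlap must be in [0, chunk_size)")

    words = text.split()
    step = chunk_size - overlap  # how far the window advances each time
    chunks = []
    for start in range(0, len(words), step):
        chunks.append(" ".join(words[start:start + chunk_size]))
        if start + chunk_size >= len(words):
            break  # the last window already reached the end of the text
    return chunks


chunks = split_into_chunks("a b c d e f g h i j", chunk_size=4, overlap=1)
print(chunks)
```

With a chunk size of 4 and an overlap of 1, each chunk repeats the last word of the previous one, so a sentence that straddles a boundary is less likely to be cut off from its context.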

- Choice of Vector Database

This decision should be based on factors such as cost, features offered, and integration with LLM development frameworks like LangChain or LlamaIndex.

By carefully considering these design choices during the indexing phase, you can optimize the performance of your RAG system and enhance the user experience.

Retrieval

Retrieval refers to the process of finding relevant chunks in the vector database that match the user’s query. When designing the retrieval stage, several considerations come into play:

1. Retrieval Strategy

Semantic search is the simplest way to retrieve documents. However, it may underperform on its own because it can miss important exact keywords from the query, such as product names or identifiers. One way to improve retrieval is Hybrid Search, which combines keyword-based search with semantic search.
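One common way to merge a keyword ranking and a semantic ranking is reciprocal rank fusion (RRF). The sketch below is an illustration of that technique, not necessarily what any particular vector database does internally; the document ids are made up, and `k=60` is a conventional default.

```python
def reciprocal_rank_fusion(rankings, k=60):
    """Fuse several ranked lists of document ids into one ranked list.

    Each document earns 1 / (k + rank) from every list it appears in.
    """
    scores = {}
    for ranking in rankings:
        for rank, doc_id in enumerate(ranking, start=1):
            scores[doc_id] = scores.get(doc_id, 0.0) + 1.0 / (k + rank)
    return sorted(scores, key=lambda doc_id: scores[doc_id], reverse=True)


# Hypothetical results from a keyword search and a semantic search.
keyword_ranking = ["doc_b", "doc_a", "doc_c"]
semantic_ranking = ["doc_a", "doc_d", "doc_b"]

fused = reciprocal_rank_fusion([keyword_ranking, semantic_ranking])
print(fused)
```

Documents that appear near the top of both lists rise to the top of the fused list, while documents found by only one method are still kept.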

After retrieving relevant documents, it’s possible that documents with lower similarity may contain more pertinent information to answer the user’s query. In such cases, a document reranker is employed to re-prioritize the documents and select the top-k out of them. However, caution is needed while using a reranker, as it may introduce latency and additional cost per query.

Besides choosing a strategy, deciding on hyperparameters for the retrieval process is crucial. Most retrieval strategies involve selecting top-k chunks and setting a similarity cutoff. Top-k determines the number of chunks to retrieve, while the similarity cutoff ensures that no retrieved chunk has a similarity value below the threshold.
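The interaction between top-k and the similarity cutoff can be sketched in a few lines. The chunk texts and scores below are invented for illustration, and `select_chunks` is a hypothetical helper name.

```python
def select_chunks(scored_chunks, top_k, similarity_cutoff):
    """Keep at most top_k chunks whose similarity is at or above the cutoff."""
    kept = [(chunk, score) for chunk, score in scored_chunks
            if score >= similarity_cutoff]
    kept.sort(key=lambda pair: pair[1], reverse=True)
    return kept[:top_k]


# Hypothetical (chunk, similarity) pairs returned by a vector search.
scored = [
    ("install guide", 0.82),
    ("license terms", 0.35),
    ("config reference", 0.74),
    ("release notes", 0.61),
]

selected = select_chunks(scored, top_k=2, similarity_cutoff=0.5)
print(selected)
```

Note that the cutoff can return fewer than top-k chunks, or none at all, so the generation step should handle an empty context gracefully.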

2. Query Transformations

Sometimes, the phrasing of the query may not be optimal for finding relevant data to support the answer. One solution is to transform the input query before retrieval. However, this approach may slow down retrieval and increase the cost per query. Different methods of query transformation include:

Multiquery: Prompting LLMs to generate similar queries that convey the same meaning but are worded differently. This helps retrieve a more diversified set of chunks in the embedding space.

Query Rewriting: Prompting an LLM to rephrase the query more clearly before retrieval. This is particularly useful when the system is used by non-native speakers.

Sub Query Generation: Dividing the user query into parts and using the subqueries as separate queries to the LLM to obtain the answer.

Hypothetical Document Embeddings (HyDE): Allowing the LLM to generate a response based on the query alone, without any context, and then performing retrieval based on both the query and the generated answer. This approach assumes that the response, even if incorrect, will share some similarities with a correct answer.
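As an illustration of the Multiquery approach, the sketch below retrieves chunks for the original query and for each reworded variant, then merges the results without duplicates. Both `generate_variants` (the LLM call) and `retrieve` (the vector search) are hypothetical callables that you would supply; the stand-ins here only exist so the example runs.

```python
def multi_query_retrieve(query, generate_variants, retrieve):
    """Retrieve for the query and its variants, merging results in order."""
    queries = [query] + list(generate_variants(query))
    seen = set()
    merged = []
    for q in queries:
        for chunk in retrieve(q):
            if chunk not in seen:  # drop chunks already found by another variant
                seen.add(chunk)
                merged.append(chunk)
    return merged


# Stand-ins for an LLM and a vector store, for illustration only.
def fake_generate_variants(query):
    return ["how do I change my password"]


def fake_retrieve(query):
    index = {
        "reset password": ["chunk_1", "chunk_2"],
        "how do I change my password": ["chunk_2", "chunk_3"],
    }
    return index.get(query, [])


results = multi_query_retrieve("reset password", fake_generate_variants, fake_retrieve)
print(results)
```

Each variant costs one extra retrieval call (plus the LLM call that produces the variants), which is where the added latency and cost come from.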

Generation

During the generation phase, several crucial considerations come into play, including the choice of model, system prompt, and system hyperparameters.

Model Selection: The choice of model plays a significant role in determining the quality and effectiveness of the generated responses.

System Prompt: The system prompt is a message added to the context and query sent to the LLM. It essentially “pre-programs” the chatbot to behave in a desired manner, influencing the tone, style, and content of its responses.

System Hyperparameters: Important system hyperparameters include the context window size and maximum response length.

Context Window Size: This parameter determines how much context is provided to the model. A poorly chosen size can degrade response quality: too small a window can cut off relevant retrieved chunks, while too large a window adds cost and can bury the relevant information.

Max Response Length: Setting a limit on the maximum response length is a crucial design choice. It not only affects the quality of responses but also influences the cost per query, especially in resource-constrained environments.
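A minimal sketch of how the system prompt, retrieved chunks, and query might be assembled under a context budget is shown below. It assumes a crude token count based on whitespace-separated words and ignores the few words used by the labels; a real system would use the tokenizer of the chosen model. The prompt layout and the name `build_prompt` are our own choices, not a framework API.

```python
def count_tokens(text):
    """Rough stand-in for a tokenizer: counts whitespace-separated words."""
    return len(text.split())


def build_prompt(system_prompt, chunks, query, max_context_tokens):
    """Add retrieved chunks in rank order until the budget is used up."""
    budget = max_context_tokens - count_tokens(system_prompt) - count_tokens(query)
    selected = []
    for chunk in chunks:
        cost = count_tokens(chunk)
        if cost > budget:
            break  # stop at the first chunk that no longer fits
        selected.append(chunk)
        budget -= cost
    context = "\n\n".join(selected)
    return f"{system_prompt}\n\nContext:\n{context}\n\nQuestion: {query}"


prompt = build_prompt(
    system_prompt="Answer from context only.",
    chunks=["one two three", "four five six seven", "eight"],
    query="What is X?",
    max_context_tokens=12,
)
print(prompt)
```

Here only the first chunk fits the budget, so lower-ranked chunks are dropped. The maximum response length is a separate limit that is usually passed to the model at generation time.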

By carefully considering these factors, the generation process can be optimized to produce high-quality responses tailored to the specific requirements and constraints of the application.

Evaluation

Evaluating RAG systems or LLMs in general presents a non-trivial challenge. However, when assessing RAG systems, a structured framework can guide the evaluation process. The RAG system comprises three key components: Query, Context, and Response, collectively referred to as the RAG triad.

To effectively evaluate RAG systems, the following performance metrics can be defined:

Answer Relevance (Response ↔ Query): Assesses whether the generated response is pertinent to the query posed by the user.

Context Relevance (Query ↔ Context): Determines the relevance of the retrieved context to the user’s query.

Groundedness (Response ↔ Context): Evaluates whether the response is well-supported by the provided context.

These metrics are typically scored by an LLM, so the evaluation task falls within the realm of LLM self-assessment and is comparatively less complex than generation. While this approach generally works well for generic use cases, it may run into difficulties in domain-specific scenarios with technical content, where the LLM may struggle to produce reliable evaluations.
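The three metrics of the RAG triad can be organized as in the sketch below. The `judge` argument is a hypothetical callable standing in for an LLM-based scorer; the toy word-overlap judge is only there to make the example runnable and is not a meaningful quality measure.

```python
from dataclasses import dataclass


@dataclass
class TriadScores:
    answer_relevance: float   # Response <-> Query
    context_relevance: float  # Query <-> Context
    groundedness: float       # Response <-> Context


def evaluate_triad(query, context, response, judge):
    """Score one RAG interaction on the three triad metrics."""
    return TriadScores(
        answer_relevance=judge("answer_relevance", query, response),
        context_relevance=judge("context_relevance", query, context),
        groundedness=judge("groundedness", context, response),
    )


def toy_judge(metric, first, second):
    """Toy scorer: fraction of words in `second` that also occur in `first`."""
    first_words = set(first.lower().split())
    second_words = set(second.lower().split())
    if not second_words:
        return 0.0
    return len(first_words & second_words) / len(second_words)


scores = evaluate_triad(
    query="how to reset password",
    context="to reset password open settings",
    response="open settings to reset password",
    judge=toy_judge,
)
print(scores)
```

In practice the judge would prompt an LLM with the two texts and a rubric for the given metric, and the scores would be averaged over a set of test queries.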

Conclusion

While we have aimed to provide a comprehensive overview of the design choices involved in crafting a RAG system, this list is by no means exhaustive. Given the dynamic nature of this field, characterized by ongoing research and rapid advancements, new insights and techniques continue to emerge regularly. As such, designing RAG systems remains an active area of exploration and innovation, promising further developments in the days ahead.