Using IVerifySpec to test IDesignSpec

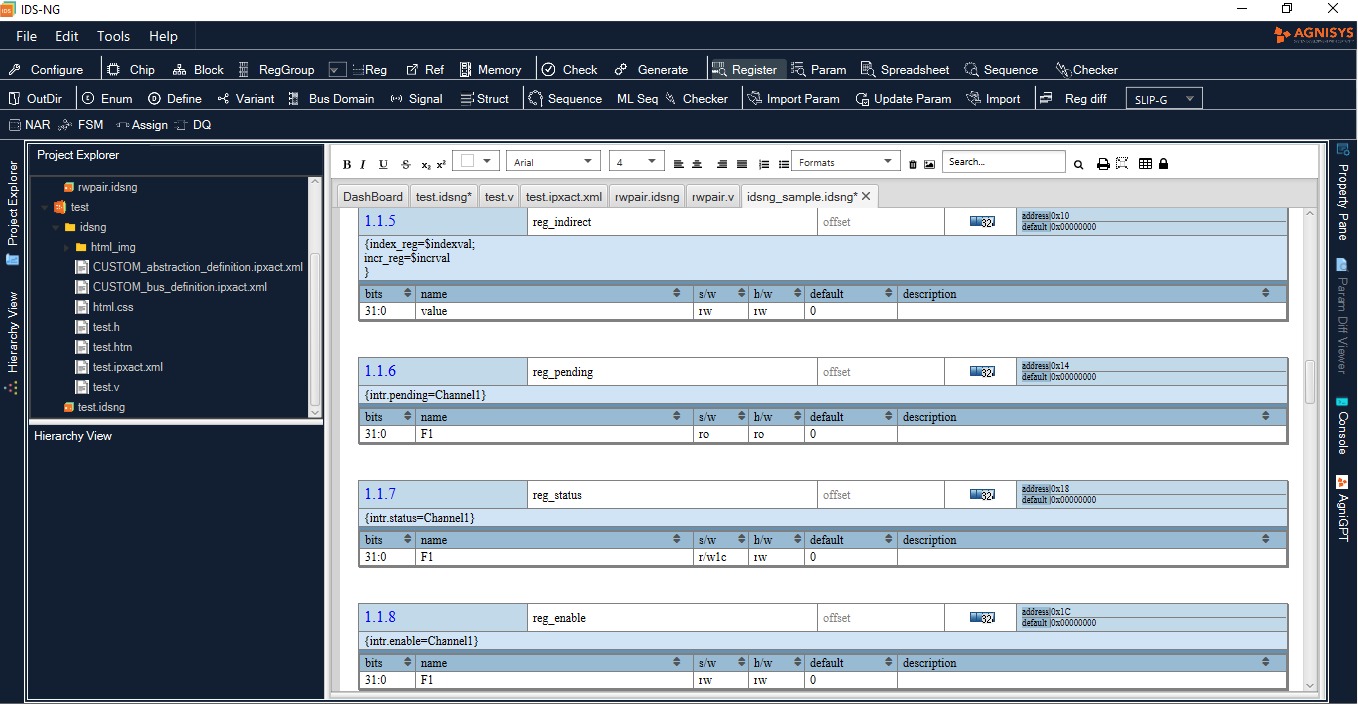

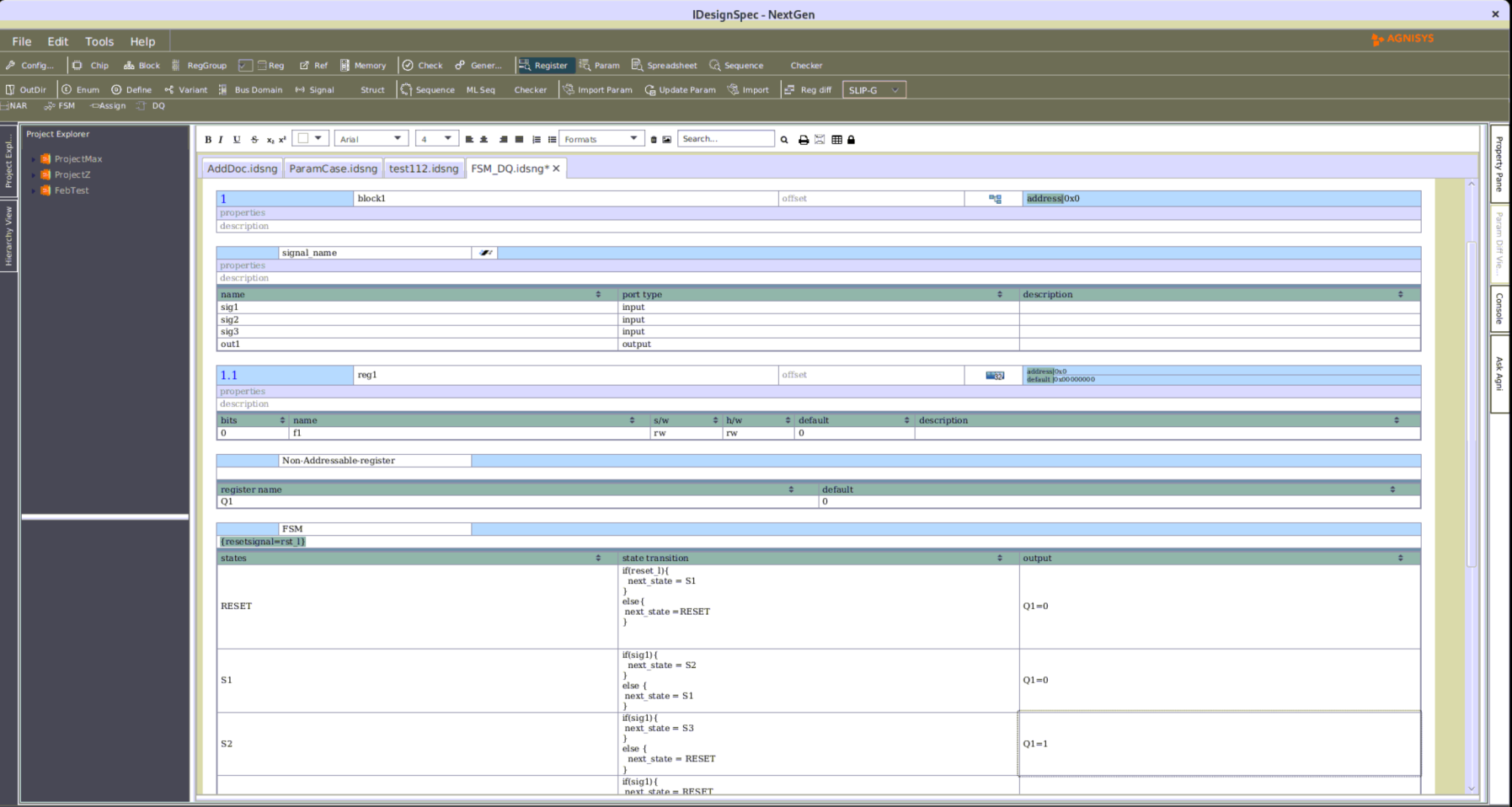

IDesignSpec (IDS) generates several outputs from a single spec. It started out as a simple tool that dealt only with registers; over six years it has metamorphosed into an Executable Spec tool for digital design.

This article is about the complexity of ensuring the quality of such a tool.

The tool generates a number of downstream outputs, including synthesizable RTL for logic designs, the corresponding test benches, and various standard formats. The generated RTL must be checked for compilation and synthesis errors. Moreover, every change to the input specification produces a different view of the downstream outputs, and each output created from the same spec needs to be checked for errors.

Testing such a tool is tedious and demanding, and a major part of the time goes into analyzing the status of the tests. Our regression environment consists of hundreds of tests that verify the tool's transition from the previous version to the latest one.

We use IVerifySpec (IVS), our own verification planning and management tool, to verify IDesignSpec. This is a case of the doctor taking his own medicine: we created IVerifySpec for the sole purpose of ensuring that a product is verified to meet its specs, and there is no better way to prove that it does so than to use IVerifySpec to verify IDesignSpec.

IVSExcel is a plugin for Microsoft Excel that enables a verification team to plan and analyze verification progress right inside Excel.

Running the regression generates a pair of XML files: one for the verification plan and one for the test results. A plan for the IDS regression is created in IVSExcel by importing the plan XML file into the plugin, and the regression status is brought into IVS by annotating the plan with the results file. When the regression is run against the next version of the tool, all changes with respect to the previous version can be brought into IVSExcel by importing and annotating the new XML files again, this time in update mode.
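To make the import and annotate flow concrete, here is a minimal Python sketch that reads a results file into flat records which could then be annotated onto a plan. The article does not describe IVerifySpec's actual XML schema, so the `<results>`, `<test>` and `<result>` elements, their attributes, the `load_results` helper and the `example_register_test` test name are all hypothetical.

```python
import xml.etree.ElementTree as ET

# Hypothetical results format. The real IVerifySpec XML schema is not
# described here, so these element and attribute names are assumptions.
SAMPLE_RESULTS = """
<results>
  <test name="uvm_user_define_coverage">
    <result outfile="UVM" stage="compilation" status="pass"/>
    <result outfile="UVM" stage="diff" status="fail"/>
  </test>
  <test name="example_register_test">
    <result outfile="verilog" stage="synthesis" status="pass"/>
  </test>
</results>
"""


def load_results(xml_text):
    """Flatten a results document into (test name, properties, status) records."""
    root = ET.fromstring(xml_text.strip())
    records = []
    for test in root.iter("test"):
        for result in test.iter("result"):
            props = dict(result.attrib)  # every attribute except status is a property
            status = props.pop("status")
            records.append((test.get("name"), props, status))
    return records


if __name__ == "__main__":
    for record in load_results(SAMPLE_RESULTS):
        print(record)
```

Keeping every non-status attribute as a property is what later allows results to be filtered by arbitrary user-defined properties.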

IVSExcel made it easy to detect trends and issues in the IDS regression, thanks to features that highlight the updates in the regression and that filter test status by properties and by expressions involving properties.

Here are some of the features that IVSExcel provides and how they benefit the end user.

Table 1: IVS Features

| Feature | Benefit |
| --- | --- |
| Create verification plan from simulation database | Users can either create the plan in Excel by identifying the key metrics used, or create it from the simulation results in any vendor's database. |
| Update verification plan with coverage reports and test reports | Both coverage reports and test results can be annotated onto the plan. |
| Annotate either as a new dataset or as a merge into an existing dataset | A merge, as opposed to a new dataset, is very useful for seeing what has changed compared to the last dataset. This is extremely useful for regression analysis. |
| Update verification plan and results | The same plan can be updated, which eliminates the need to create new Excel files and in turn helps manage the large amount of data generated in verification. |
| Highlight changes in plan and results in update mode | Easy detection of trends and issues in the verification process. |
| Message display and viewing of log files from within the tool | Error messages from the live regression and simulation environment can easily be displayed in the plan. Links to log files let users refer to source files, log files, HTML pages of coverage reports, etc. |
| Metrics and metric groups | Metrics are key to measuring verification effectiveness; a metric can indicate test status, functional coverage, code coverage, assertions, etc. Metric groups can be used to build a hierarchy in the verification plan. |
| User-defined properties of metrics | Users can summarize results based on these properties and filter them with expressions involving the properties, which helps them quickly identify the root of a problem. |
| Consolidation of results by metric groups | Presenting data in a consolidated form helps users understand a large amount of data. |
| Simple web-based interface to view the plan and its associated results | The verification team can share the plan and results on an intranet and analyze the data on the website using charts. |

Sample Verification Plan

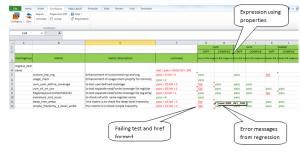

The snapshot shows a sample verification plan containing metrics that capture the status of some tests. There are five tests in this plan. Highlights of this verification plan are:

- The summary column shows the status of each test along with the error messages from the environment in which it was run.

- A test can have multiple results, and whenever a result failed, its log files were opened directly from the tool through hyperlinks.

- Additional columns were added to the right of the summary column to summarize results according to the result properties.

For example, the header of column J, outfile=UVM && stage=compilation, is an expression combining the properties outfile=UVM and stage=compilation. This column therefore tells the user whether the UVM output of a particular test compiles correctly.

For instance, in the snapshot below, the UVM output of the test named “uvm_user_define_coverage” in row 7 compiles correctly, as shown in column J, but differs from the golden file, as shown in column I.

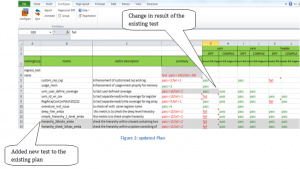
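The filtered columns can be thought of as evaluating each column header against the properties of every result of a test. The sketch below assumes a deliberately simple grammar (property=value terms joined by `&&`), case-insensitive value matching, and a hypothetical rule that a column shows `fail` if any matching result fails; `parse_expression`, `matches` and `column_status` are illustrative names, not part of IVerifySpec's API.

```python
# Hypothetical grammar: a column header is a conjunction of property=value
# terms joined by "&&". IVerifySpec's real expression language may differ.
def parse_expression(expr):
    """Turn 'outfile=UVM && stage=compilation' into {'outfile': 'UVM', 'stage': 'compilation'}."""
    required = {}
    for term in expr.split("&&"):
        key, value = term.split("=", 1)
        required[key.strip()] = value.strip()
    return required


def matches(props, required):
    """True if every required property is present with the same value.

    Values are compared case-insensitively, an assumption made because the
    article writes both 'outfile=UVM' and 'outfile=uvm'."""
    return all(props.get(key, "").lower() == value.lower()
               for key, value in required.items())


def column_status(results, expr):
    """Status shown in one filtered column for one test.

    Assumed rule: 'n/a' if no result matches, 'fail' if any match fails, else 'pass'."""
    required = parse_expression(expr)
    selected = [status for props, status in results if matches(props, required)]
    if not selected:
        return "n/a"
    return "fail" if "fail" in selected else "pass"


# Results of the test in row 7 as (properties, status) pairs; values are illustrative.
row7 = [
    ({"outfile": "UVM", "stage": "compilation"}, "pass"),
    ({"outfile": "UVM", "stage": "diff"}, "fail"),
]
print(column_status(row7, "outfile=UVM && stage=compilation"))  # pass
print(column_status(row7, "outfile=uvm && stage=diff"))         # fail
```

With this illustrative data, the compilation column reports pass while the diff column reports fail, mirroring columns J and I in the snapshot.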

Updating the Plan and the results

New tests and their results were added to the plan above using the import feature in merge mode, and the results were annotated into the existing dataset in “merge data” mode. The areas highlighted in grey show the newly added tests and the existing tests whose results have changed.

For the existing test in row 7, both the summary in column F and the filtered result under the header “outfile=uvm && stage=diff” in column I have changed, so the user can easily see that the UVM output of that test no longer differs from the golden file.
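Merge mode can be pictured as updating an existing dataset in place while recording which tests are new and which have changed results, which is what the grey highlighting conveys. The following sketch assumes each dataset is a mapping from test name to column statuses; `merge_datasets`, the status values and the `example_*` test names are hypothetical.

```python
def merge_datasets(previous, current):
    """Merge a new regression run into an existing dataset.

    Both arguments map test name -> {column header: status}. Returns the merged
    dataset and a dict marking each highlighted test as 'added' or 'changed'.
    Tests missing from the new run keep their previous results.
    """
    merged = {test: dict(cols) for test, cols in previous.items()}  # copy; inputs stay untouched
    highlights = {}
    for test, cols in current.items():
        row = merged.setdefault(test, {})
        if test not in previous:
            highlights[test] = "added"
        elif any(row.get(col) != status for col, status in cols.items()):
            highlights[test] = "changed"
        row.update(cols)  # per-column merge: new values overwrite old ones
    return merged, highlights


# Illustrative data: only the row 7 test name comes from the article.
PREVIOUS = {
    "uvm_user_define_coverage": {"summary": "fail", "outfile=uvm && stage=diff": "fail"},
    "example_register_test": {"summary": "pass"},
}
CURRENT = {
    "uvm_user_define_coverage": {"summary": "pass", "outfile=uvm && stage=diff": "pass"},
    "example_new_test": {"summary": "pass"},
}

merged, highlights = merge_datasets(PREVIOUS, CURRENT)
print(highlights)  # {'uvm_user_define_coverage': 'changed', 'example_new_test': 'added'}
```

Tests absent from the new run keep their earlier results, which is the difference between merging and creating a new dataset.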

Summary

IVerifySpec enabled us to create a highly flexible and dynamic plan for the regression analysis of IDesignSpec. We could easily detect issues in the regression, saving a lot of time and effort. By using user-defined properties for metrics and expressions over those properties, we were able to filter the regression status of the multiple outputs created from a single test.

IVSExcel makes it easier to develop and execute a verification plan and to monitor project status.

We feel that we would not have been able to deliver high-quality products had we not used and deployed IVerifySpec.