Teaching the computers to teach themselves

Why Machine Learning Matters

Machine learning (or “ML” for short) may have begun life as something of a buzzword, but it has long since proven itself to be anything but. At its core, the term describes the use of statistical techniques to let computers “learn” from data without being explicitly programmed for each case. In essence, you build a system to complete a specific task. The more data you feed into the system, the better it tends to get at that task, without requiring human beings to make those improvements by hand. This seemingly straightforward concept has led to a massive disruption in the field of electronic design, especially over the last few years. It has already led to the creation of a brand new class of embedded systems that use both machine learning and deep learning, otherwise known as ML/DL systems.

The Power of Machine Learning: Breaking It Down

Although it’s still a relatively new concept in the modern context, machine learning is already having a massive impact on just about every industry. Businesses regularly use machine learning systems powered by customer data to help improve satisfaction. If a machine learning system can analyze user activity in real time, you’re in a better position to spot the warning signs of a potential account closure before it happens. You can then proactively reach out to that customer and save the account before they head off into the arms of a competitor. Or, a financial services company might use machine learning to better respond to changes and fluctuations in the local market as they happen. The system itself can quickly identify trends and patterns that would have otherwise gone unnoticed, alerting humans that something is about to happen so that they can react in the most appropriate way.

But one of the most famous examples of machine learning at play is one that you’ve been carrying around in your pocket for years without realizing it. Voice recognition systems, with Apple’s Siri being a prominent example, use both machine learning and deep neural networks to essentially “imitate” human interactions. It’s why Siri appears to get slightly smarter the more you use it. As it gets to know more about how you speak as an individual, it comes to better “understand” the nuances and the semantic choices that you’re making. It learns what you mean when you say things like “home” or “work”, and the accuracy of the answers provided to your questions improves as a result.

But what a lot of people don’t realize is that these ML/DL applications depend on what are referred to as hyperparameters. These are settings of the learning algorithm itself, such as the learning rate or the number of layers, that engineers choose rather than leaving the model to learn them from data. When fine-tuned on an ongoing basis, they allow the model not just to solve a machine learning problem but to solve it as quickly and efficiently as possible.
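To make the effect of a single hyperparameter concrete, here is a minimal sketch. It assumes a toy problem that is not from any real product: plain gradient descent minimizing (w - 3)^2, where the learning rate is the only hyperparameter. The function name `steps_to_converge` and all of the values are illustrative.

```python
def steps_to_converge(learning_rate, target=3.0, tol=1e-6, max_steps=1000):
    """Count gradient-descent steps needed to minimize (w - target)**2.

    Returns max_steps if the run has not converged by then.
    """
    w = 0.0  # arbitrary starting point
    for step in range(max_steps):
        if abs(w - target) < tol:
            return step
        grad = 2.0 * (w - target)  # derivative of (w - target)**2
        w -= learning_rate * grad
    return max_steps


# Same model, same data, different hyperparameter values.
for lr in (0.01, 0.1, 0.5, 1.1):
    print(f"learning_rate={lr}: {steps_to_converge(lr)} steps")
```

On this toy problem a learning rate of 0.5 converges in a single step, smaller rates take progressively longer, and 1.1 overshoots and never converges. Real models rarely behave this cleanly, but the pattern is the reason tuning matters.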

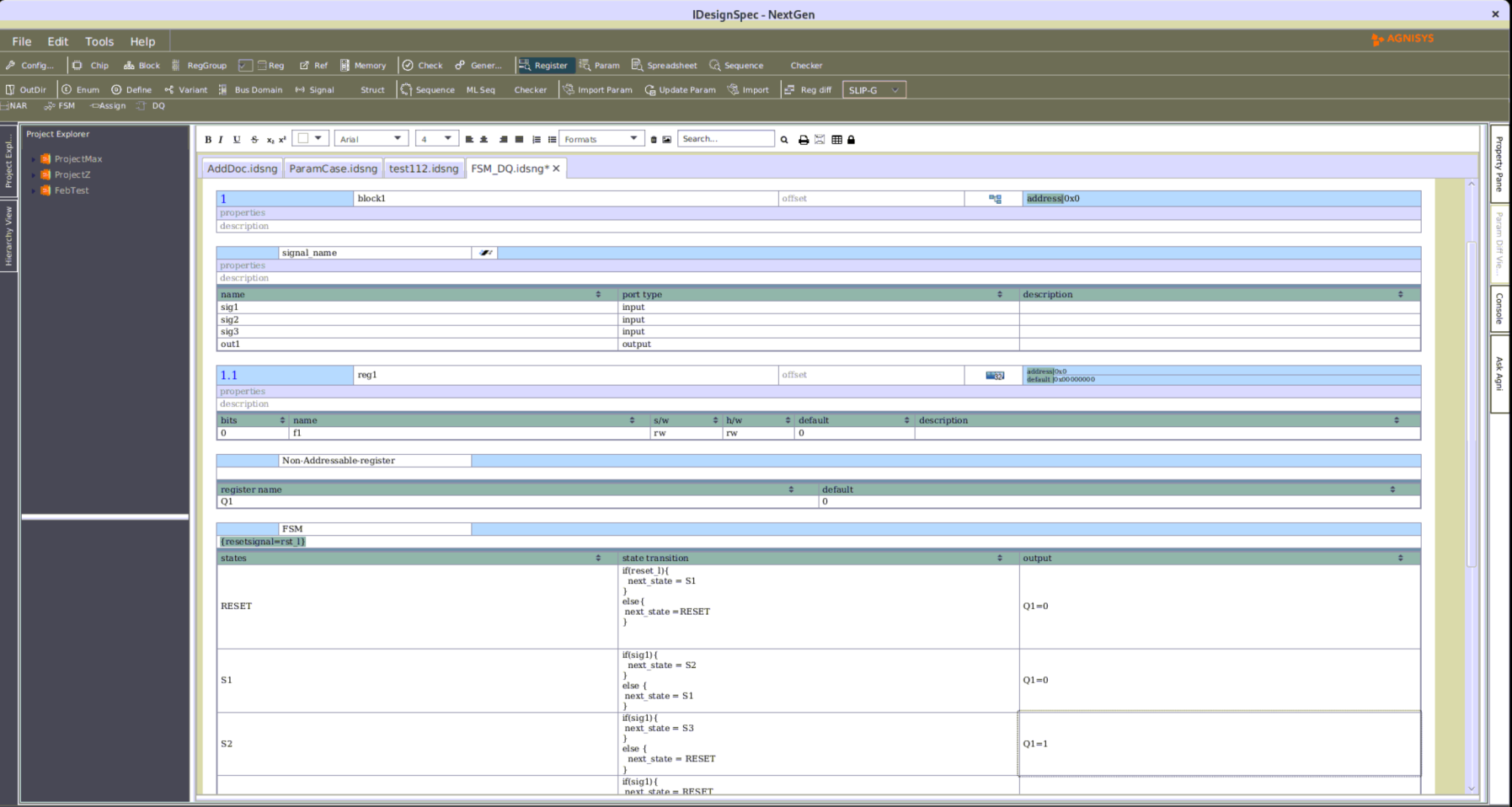

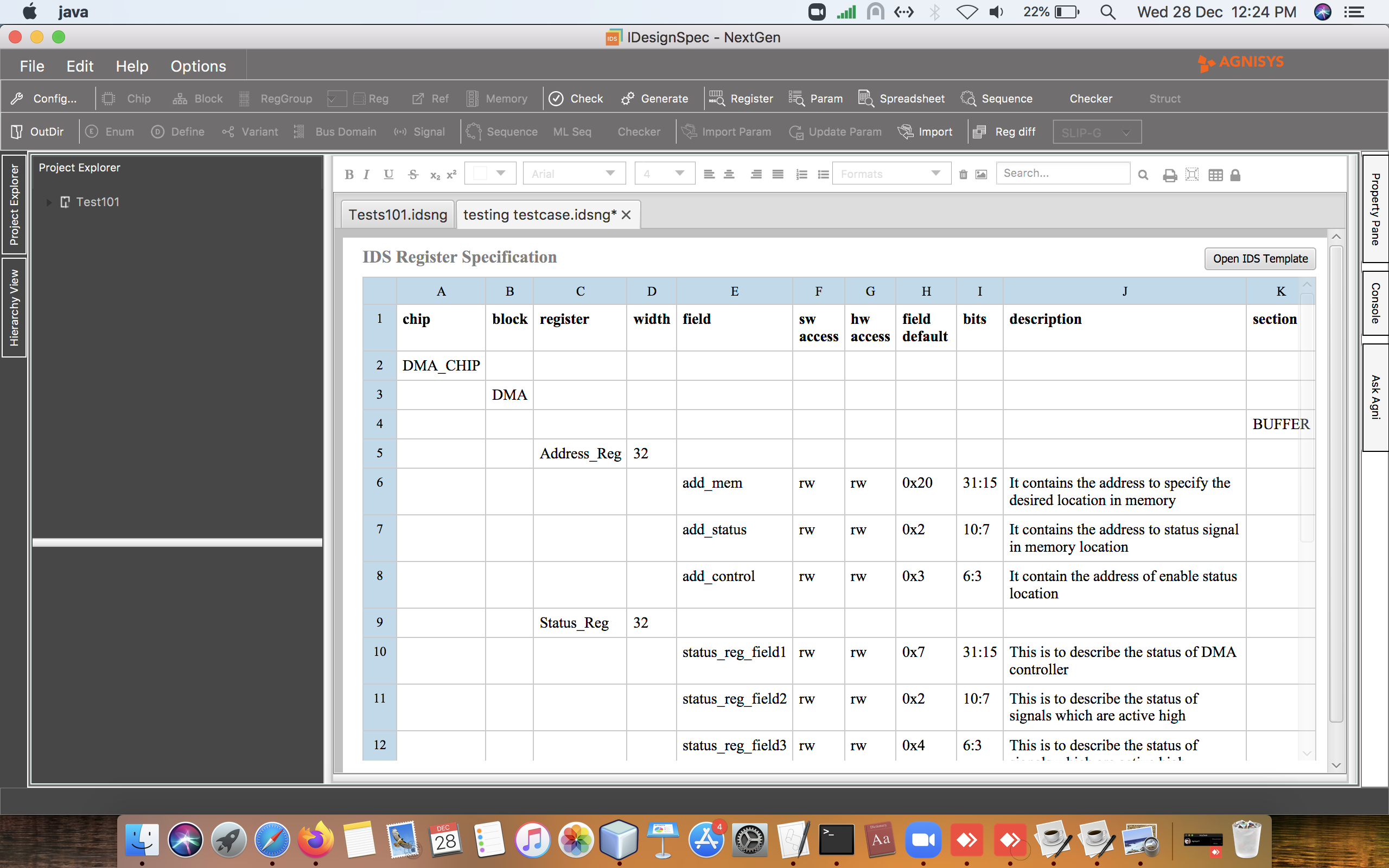

In the specific example of ML/DL chips, these hyperparameters can easily run into the thousands, if not the tens of thousands, because each layer of the neural network could potentially be controlled and programmed on an individual basis. In software, these hyperparameters are fairly easy to control and experiment with. When machine learning applications are implemented in hardware, the hyperparameters are usually converted into register settings, which makes them addressable via a register bus.
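As a rough illustration of that conversion, the sketch below packs one layer's hyperparameters into a single 32-bit register word and computes the address of that word on the bus. The field layout, the base address, and the one-register-per-layer scheme are all assumptions made for this example; they do not describe any particular chip or tool.

```python
# Hypothetical field layout: name -> (least significant bit, width in bits).
FIELDS = {
    "kernel_size": (0, 4),
    "stride": (4, 4),
    "padding": (8, 4),
    "activation": (12, 2),
}
BASE_ADDRESS = 0x4000_0000  # assumed start of the per-layer register block
REGISTER_BYTES = 4          # one 32-bit register per layer


def pack_register(values):
    """Pack a dict of hyperparameter values into one register word."""
    word = 0
    for name, (lsb, width) in FIELDS.items():
        value = values[name]
        if not 0 <= value < (1 << width):
            raise ValueError(f"{name}={value} does not fit in {width} bits")
        word |= value << lsb
    return word


def unpack_register(word):
    """Recover the hyperparameter values from a register word."""
    return {
        name: (word >> lsb) & ((1 << width) - 1)
        for name, (lsb, width) in FIELDS.items()
    }


def register_address(layer_index):
    """Bus address of the register that controls the given layer."""
    return BASE_ADDRESS + layer_index * REGISTER_BYTES


layer = {"kernel_size": 3, "stride": 2, "padding": 1, "activation": 1}
word = pack_register(layer)
print(hex(register_address(2)), hex(word))  # 0x40000008 0x1123
```

With thousands of such settings, hand-maintaining a layout like `FIELDS` becomes error-prone, which is why the layout is usually generated from a specification.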

For the sake of example, consider a Convolutional Neural Network, or CNN for short. In this case, a chip designer may need to dynamically control the topology of the network, the stride or padding of each layer, or some combination of these. It is therefore reasonable to expect that the designer would want each of those parameters to be controllable dynamically, layer by layer.
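To see why stride and padding matter per layer, the sketch below applies the standard output-size formula for a convolution, floor((input + 2 × padding − kernel) / stride) + 1, along one spatial dimension. The three-layer stack and the 32-pixel input are made up for illustration.

```python
def conv_output_size(input_size, kernel_size, stride, padding):
    """Output length of a convolution along one spatial dimension."""
    if stride < 1:
        raise ValueError("stride must be at least 1")
    return (input_size + 2 * padding - kernel_size) // stride + 1


def trace_sizes(input_size, layers):
    """Feature-map size after each layer, starting with the input size."""
    sizes = [input_size]
    for layer in layers:
        sizes.append(conv_output_size(sizes[-1], **layer))
    return sizes


# Illustrative per-layer settings, each independently adjustable.
layers = [
    {"kernel_size": 3, "stride": 1, "padding": 1},
    {"kernel_size": 3, "stride": 2, "padding": 1},
    {"kernel_size": 5, "stride": 2, "padding": 0},
]
print(trace_sizes(32, layers))  # [32, 32, 16, 6]
```

Changing a single stride from 1 to 2 halves every feature map downstream of that layer, so a per-layer setting can reshape the memory and compute needs of the whole network.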

In the case of something like a Recurrent Neural Network (RNN), the choice of cell, be it a Long Short-Term Memory (LSTM) cell or a Gated Recurrent Unit (GRU), could be dynamically controlled. The same applies to the activation functions, be they tanh or softmax.
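The sketch below treats the cell type and the activation function as two selectable settings, and shows one consequence of the cell choice: how many weights each cell needs. It assumes the textbook formulations with one bias vector per weight set (four sets for an LSTM, three for a GRU). Some frameworks count biases differently, and the names `CellType`, `ACTIVATIONS`, and `weight_count` are illustrative.

```python
import math
from enum import Enum


class CellType(Enum):
    """Recurrent cell kinds; the value is the number of weight sets per cell."""
    LSTM = 4  # input, forget, and output gates plus the candidate cell state
    GRU = 3   # update and reset gates plus the candidate hidden state


def tanh(values):
    return [math.tanh(v) for v in values]


def softmax(values):
    peak = max(values)  # subtract the maximum for numerical stability
    exps = [math.exp(v - peak) for v in values]
    total = sum(exps)
    return [e / total for e in exps]


ACTIVATIONS = {"tanh": tanh, "softmax": softmax}


def weight_count(cell, input_size, hidden_size):
    """Weights plus biases for one recurrent layer of the given cell type."""
    per_set = hidden_size * (input_size + hidden_size) + hidden_size
    return cell.value * per_set


# Both settings are plain values that could be switched at run time.
config = {"cell": CellType.GRU, "activation": "tanh"}
activation = ACTIVATIONS[config["activation"]]
print(weight_count(config["cell"], input_size=8, hidden_size=16))  # 1200
print(weight_count(CellType.LSTM, input_size=8, hidden_size=16))   # 1600
```

Under these assumptions, switching the same layer from GRU to LSTM raises its weight storage by a third, which is the kind of knock-on effect a hardware implementation has to plan for.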

ML/DL – The Future is Finally Here

All of this is to say that the task of using machine learning is both fascinating and challenging, but as the old saying goes, anything worth doing is worth doing right. Even at a cursory glance, it’s easy to see just how valuable a concept machine learning will become as it continues to evolve over the next decade. At Agnisys, we’re proud of the headway we’ve made with homegrown machine learning algorithms. Applying these principles to the IDS NextGen™ multi-platform product has helped put users in a better position to create SoC specifications at an enterprise level, which is already having a measurable impact that is changing people’s lives for the better.

When you think about just how far machine learning has come in only the last few years, it’s truly exciting to think about what the next few may have in store for us all.

We invite you to contact us directly to learn more about IDS NextGen.